Workload Manager


Overview

EGI Workload Manager is a workload management service provided to the EGI community. It distributes users' computing tasks among the available HTC and cloud compute resources.

The service includes a DIRAC instance that is pre-configured for a number of HTC and Cloud resource pools of EGI, and offers the ability to connect additional resource pools for new communities. The service is coordinated by the EGI Foundation and operated by IN2P3 on servers provided by CYFRONET.

| Tool name | Workload Manager |

| Tool Category and description | A workload management service used to distribute users' computing tasks among the available HTC and cloud resources. |

| Tool url | dirac.egi.eu |

| Email | dirac-support@mailman.egi.eu, dirac@mailman.egi.eu, dirac-admins@mailman.egi.eu (operation team) |

| GGUS Support unit | DIRAC |

| GOC DB entry | GRIDOPS-DIRAC4EGI |

| Requirements tracking - EGI tracker | technical-support-cases, dirac4egi-eiscat3d-requirements, dirac4egi-mobrain-requirements |

| Issue tracking - Developers tracker | https://github.com/DIRACGrid/DIRAC/issues |

| Release schedule | |

| Release notes | https://github.com/DIRACGrid/DIRAC/wiki#release-information |

| Roadmap | |

| Related OLA | https://documents.egi.eu/document/3254 |

| Test instance url | https://dirac.egi.eu/ |

| Documentation | https://wiki.egi.eu/wiki/Workload_Manager |

| License | GNU General Public License v3.0 |

| Provider | IN2P3, CYFRONET |

| Source code | https://github.com/DIRACGrid/DIRAC |

Main features

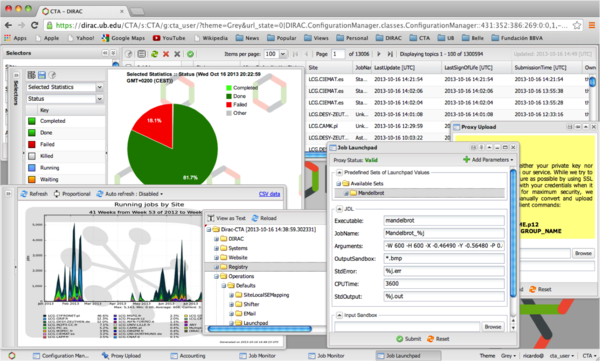

Workload Manager provides high user job efficiency when using EGI High Throughput Computing resources (Grid and Cloud). It improves general job throughput compared with native management of EGI grid or cloud computing resources. The service is based on DIRAC technology, which has proven production scalability up to peaks of more than 100 thousand concurrently running jobs for the LHCb experiment.

- Pilot-based task scheduling: pilot jobs are submitted to resources to check the execution environment. The user job description is then delivered to the pilot, which prepares the execution environment and executes the user application. This solves many problems of using heterogeneous and unstable distributed computing resources, because a user's job starts in an already verified environment.

- The environment checks can be tailored to the specific needs of a particular community by customising the pilot operations. Users can choose appropriate computing and storage resources, maximising usage efficiency for their particular requirements.

- All Workload Manager functionality is accessible through user-friendly interfaces, including a Web Portal. The service has an open architecture and allows easy extension for the needs of particular applications.
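The pilot-based scheduling idea described in the list above can be illustrated with a small, self-contained simulation. This is only a sketch and not DIRAC code: the classes `Job`, `WorkerNode` and `TaskQueue`, the `healthy` flag, the software names and the site names are all hypothetical stand-ins for the real environment checks and matching logic.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Job:
    """A user job waiting in the central task queue (hypothetical model)."""
    name: str
    required_software: str


@dataclass
class WorkerNode:
    """A resource slot; 'healthy' and 'software' stand in for real checks."""
    site: str
    healthy: bool
    software: set = field(default_factory=set)


class TaskQueue:
    """Central queue: jobs stay here until a verified pilot asks for work."""

    def __init__(self, jobs):
        self._jobs = deque(jobs)

    def match(self, node):
        """Return the first waiting job whose requirement the node satisfies."""
        for job in list(self._jobs):
            if job.required_software in node.software:
                self._jobs.remove(job)
                return job
        return None

    def __len__(self):
        return len(self._jobs)


def run_pilot(node, queue):
    """Pilot logic: check the environment first, then pull and run one job."""
    if not node.healthy:
        # A broken node only costs a pilot; no user job is ever sent to it.
        return None
    job = queue.match(node)
    if job is None:
        return None
    return f"{job.name} ran at {node.site}"


queue = TaskQueue([Job("fit-spectrum", "python3"), Job("dock-ligand", "haddock")])
nodes = [
    WorkerNode("SITE-A", healthy=False, software={"python3", "haddock"}),
    WorkerNode("SITE-B", healthy=True, software={"python3"}),
    WorkerNode("SITE-C", healthy=True, software={"haddock"}),
]
results = [run_pilot(node, queue) for node in nodes]
print(results)
```

In this toy run the pilot on the unhealthy node exits without taking a job, so both user jobs land on nodes that passed the check. The point of the design is that failures are absorbed by pilots rather than by user jobs.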

Target User Groups

The service is suitable for established Virtual Organization communities, the long tail of users, SMEs and industry:

- EGI and EGI Federation participants

- Research communities

This service platform eases scientific computing by overlaying distributed computing resources in a manner that is transparent to the end user. For example, WeNMR, a structural biology community, uses DIRAC for a number of community services, and reported an improvement from the previous 70% to 99% with DIRAC job submission. The benefits of using this service include, but are not limited to:

- Maximised usage efficiency by choosing appropriate computing and storage resources in real time

- A large-scale distributed environment to manage data storage, movement, access and processing

- Job submission and workload distribution handled in a transparent way

- Interoperability: handles different storage types and supports both cloud and grid capacity

- A user-friendly interface that allows users to choose among different DIRAC services

There are several options to access the service:

1. Members of a community whose resource pool is already configured in the DIRAC4EGI instance: use the DIRAC4EGI web portal or the DIRAC Client to access the service.

2. Individual researchers who want to do some number crunching for a limited period of time, with a reasonable (not too high) number of CPUs: use the access.egi.eu resource pool (to which DIRAC is expected to be connected). Submit a request for this; the User Community Support Team (UCST) will check identity and justification of use.

3. Representatives of a community who want to try DIRAC and EGI: same as option 2.

4. Representatives of a community who want to request DIRAC for the community's own resource pool: submit a request via the Marketplace. The UCST will call back to discuss the details (which resource pool, what number of users, AAI, etc.).

Getting started

How to request the service: Submit a service request via the EGI website/marketplace: https://marketplace.egi.eu/compute/73-workload-manager-beta.html

The EGI User Community Support Team then contacts CNRS to request support for the service integration (on-boarding of the new customer).

- User documentation:

- Support Unit FAQ

Technical Service Architecture

DIRAC was originally developed (~10 years ago) to support the production activities of the LHCb experiment at CERN. Today it is general-purpose software supporting Grid, Cloud and HPC resources, targeting various large scientific communities including LHCb, Belle II, EGI, CTA, GridPP, WeNMR, VIP, FranceGrilles, SKA, VIRGO, etc. DIRAC provides complete solutions for production management, handling large-scale distributed scientific data and optimising job execution.

The DIRAC framework offers standard rules for creating DIRAC extensions. A large part of the functionality is implemented as plugins, which makes it possible to customise DIRAC for a particular application with minimal effort. It provides multiple commands usable in a Unix shell that give access to all DIRAC functionality. It also provides a RESTful API suitable for use with application portals (e.g. the WS-PGRADE portal is interfaced with DIRAC this way). The Python programming interface is the basic way to access all DIRAC facilities and to create new extensions. For all DIRAC services, see the DIRAC documentation.
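As an illustration of how a job might be described before it is submitted with the shell commands mentioned above, the sketch below renders a minimal job description in JDL (Job Description Language) syntax. The helper `render_jdl` and all example values are hypothetical and not part of DIRAC. The attribute names (`JobName`, `Executable`, `Arguments`, `StdOutput`, `StdError`, `OutputSandbox`) are assumed to match the DIRAC user documentation and should be checked against the release in use.

```python
def render_jdl(attributes):
    """Render a dict of job attributes as JDL text.

    Strings become quoted values; lists become brace-delimited lists of
    quoted strings. This helper is illustrative and is not part of DIRAC.
    """
    lines = []
    for key, value in attributes.items():
        if isinstance(value, (list, tuple)):
            items = ", ".join(f'"{item}"' for item in value)
            rendered = "{" + items + "}"
        else:
            rendered = f'"{value}"'
        lines.append(f"{key} = {rendered};")
    return "\n".join(lines)


# Example values are placeholders chosen for this sketch.
job = {
    "JobName": "hello_egi",
    "Executable": "/bin/echo",
    "Arguments": "Hello from a DIRAC pilot",
    "StdOutput": "std.out",
    "StdError": "std.err",
    "OutputSandbox": ["std.out", "std.err"],
}

jdl_text = render_jdl(job)
print(jdl_text)
```

The resulting text could be saved to a file and passed to the `dirac-wms-job-submit` command from a configured DIRAC client with a valid proxy. The exact set of attributes a given community needs will depend on its configuration.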

The Workload Manager service (DIRAC4EGI) is a cluster of DIRAC services running on EGI resources (HTC, Cloud, HPC) and supporting multiple VOs. The main service components include:

- The Workload Management System (WMS), which is composed of multiple loosely coupled components that work together with the help of a common Configuration Service ensuring reliable service discovery. The modular architecture makes it easy to incorporate new types of computing resources as well as new task scheduling algorithms in response to evolving user requirements. DIRAC services can run on multiple geographically distributed servers, which increases overall reliability and gives excellent scalability.

- The REST server, which provides a language-neutral interface to DIRAC services.

- The Web portal, which provides simple and intuitive access to most of the DIRAC functionality, including management of computing tasks and distributed data. It also has a modular architecture designed specifically to allow easy extension for the needs of particular applications.

All DIRAC services are at or above Technology Readiness Level 8 (TRL8).

The modular organisation of the DIRAC components allows selecting a subset of the functionality suitable for a particular application, or easily adding missing functionality. This is very useful for communities that need a customised environment for handling their own data.
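The role a common configuration service plays in service discovery, and the reliability gained from running a service on several servers, can be sketched as follows. This is a simplified model and not the DIRAC implementation: the class `ConfigurationService`, the function `call_service`, the service name and the endpoint URLs are all hypothetical placeholders, and the reachability check stands in for a real network call.

```python
class ConfigurationService:
    """Hypothetical registry mapping a service name to its known endpoints."""

    def __init__(self):
        self._endpoints = {}

    def register(self, service, url):
        """Record one more endpoint under the given service name."""
        self._endpoints.setdefault(service, []).append(url)

    def lookup(self, service):
        """Return a copy of the endpoint list, in registration order."""
        return list(self._endpoints.get(service, []))


def call_service(config, service, is_reachable):
    """Try each registered endpoint in turn; return the first reachable one.

    'is_reachable' is a callable standing in for a real connection attempt.
    """
    for url in config.lookup(service):
        if is_reachable(url):
            return url
    raise RuntimeError(f"no reachable endpoint for {service}")


config = ConfigurationService()
# Placeholder endpoints: two servers offering the same service.
config.register("WorkloadManagement/JobManager", "dips://server-a.example.org:9132")
config.register("WorkloadManagement/JobManager", "dips://server-b.example.org:9132")

# Simulate an outage of the first server.
down = {"dips://server-a.example.org:9132"}
chosen = call_service(
    config, "WorkloadManagement/JobManager", lambda url: url not in down
)
print(chosen)
```

Because clients resolve endpoints by name at call time, a server can be added or lost without changing client code, which is the property the component description attributes to the loosely coupled design.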

Use Cases

WeNMR

WeNMR is a worldwide e-Infrastructure for NMR and structural biology supported by EGI. It provides a portal integrating commonly used software for NMR and SAXS data analysis and structural modelling. It is one of the largest Virtual Organisations in the life sciences.

WeNMR has adopted the Workload Manager service and achieved higher performance and reliability (96% reliability, compared to 78% when using the regular gLite-based submission). For details, refer to http://indico3.twgrid.org/indico/getFile.py/access?contribId=61&sessionId=20&resId=0&materialId=slides&confId=593. New development (since Jan 2018) is supported by the WeNMR Thematic Service under the EOSC-hub umbrella.

EISCAT-3D

EISCAT is an international scientific association that conducts ionospheric and atmospheric measurements with radars. The new-generation EISCAT 3D radar is under construction. Once assembled, it will be a world-leading infrastructure using the incoherent scatter technique to study the atmosphere in the Fenno-Scandinavian Arctic and to investigate how the Earth's atmosphere is coupled to space.

EISCAT and EGI set up a Competence Centre (CC) in the context of the EGI-Engage project to provide researchers with data analysis tools to improve their scientific discovery opportunities.

The team developed a web portal for researchers to discover, access and analyse the data generated by EISCAT_3D. The CC opted to use the EGI Workload Manager service.

The service provides a web-based graphical interface and a command-line interface for data search and job management. The system also facilitates the development of data models and modelling tools within the EISCAT_3D community, and helps assess the feasibility of operating a central portal service through which scientists can interact and compute with EISCAT data. See https://wiki.egi.eu/wiki/Competence_centre_EISCAT_3D#First_portal_-_proof_of_concept

New development (by Jun 2018) is supported by the EISCAT-3D CC under the EOSC-hub umbrella.

VIRGO

Virgo is a giant laser interferometer designed to detect gravitational waves and located at the European Gravitational Observatory (EGO) site in Cascina, a small town near Pisa. Virgo was designed and built by a collaboration between the French National Center for Scientific Research (CNRS) and the National Institute for Nuclear Physics (INFN). It is now operated and improved by an international collaboration of scientists from France, Italy, the Netherlands, Poland, and Hungary. In 2017, the Nobel Prize in Physics was awarded for the detection of gravitational waves, work to which the Virgo and LIGO Scientific Collaborations contributed.

Virgo is now performing tests using the EGI Workload Manager service. The fact that DIRAC is already used by many communities as a mature tool was an important factor in making this decision. In addition to the EGI Workload Manager, the Virgo collaboration also decided to test the distributed data management solution to better understand its potential. Considering the data management needs of Virgo, it was agreed to set up a dedicated DIRAC file catalog component as well, hosted at the INFN data centre in Bologna, Italy.

The tests conducted so far (by Jun 2018) showed good performance. For example, the catalog was populated with millions of records, and performance remained good even with a number of records similar to what is expected in production. The tests also made it possible to find and fix some misconfigurations at the resource centres currently available in France, Italy, and the Netherlands. In the following months, more sites will be involved, and there are plans to move and register the production data between the sites using the DIRAC data transfer feature.

References

- DIRAC documentation: http://dirac.readthedocs.io/en/latest/

- DIRAC GitHub source: https://github.com/DIRACGrid

- DIRAC Virtual Research Environment pilot for EGI workshop: https://indico.egi.eu/indico/event/1994/session/19/#20140522

- DIRAC4EGI webinar 2016: https://indico.egi.eu/indico/event/2978/

- DIRAC4EGI presentation at the 6th DIRAC User Workshop 2016: https://indico.cern.ch/event/477578/contributions/2168291/

- DIRAC4EGI presentation at the 7th DIRAC User Workshop 2017: https://indico.cern.ch/event/609507/contributions/2577162/

- DIRAC4EGI presentation at the 8th DIRAC User Workshop 2018: https://indico.cern.ch/event/676817/contributions/2770700/