HBP

Community Information

Community Name

Human Brain Project

Community Short Name if any

HBP

Community Website

http://www.humanbrainproject.eu

Community Description

The aim of the Human Brain Project (HBP) is to accelerate our understanding of the human brain by integrating global neuroscience knowledge and data into supercomputer-based models and simulations. This will be achieved, in part, by engaging the European and global research communities using six collaborative ICT platforms: Neuroinformatics, Brain Simulation, High Performance Computing, Medical Informatics, Neuromorphic Computing and Neurorobotics.

Community Objectives

In the United States, the Brain Research through Advancing Innovative Neurotechnologies (BRAIN) Initiative aims to accelerate the development of new technologies to create large-scale measurements of the structure and function of the brain. The aim is to enable researchers to acquire, analyze and disseminate massive amounts of data about the dynamic nature of the brain, from cells to circuits and the whole brain.

For the HBP Neuroinformatics Platform, a key capability is to deliver multi-level brain atlases that enable the analysis and integration of many different types of data into common semantic and spatial coordinate frameworks. Because the data to be integrated is large and widely distributed, an infrastructure that enables “in place” visualization and analysis, with data services co-located with data storage, is required. Providing a standard set of services for such large data sets will enhance data sharing and collaboration in neuroscience initiatives around the world.

Main Contact Institutions

Center for Brain Simulation, Campus Biotech chemin des Mines, 9, CH-1202 Geneva, Switzerland

Main Contact

- Sean Hill (sean.hill@humanbrainproject.eu, +41 21 693 96 78)

- Jeff Muller (jeffrey.muller@epfl.ch )

- Catherine Zwahlen (catherine.zwahlen@epfl.ch )

- Stiebrina Dace (dace.stiebrina@epfl.ch, Secretary )

Collaborations with EGI Engage Open Data Platform

Scientific challenges

Open Data Challenges in HBP

- Not black and white, not just OPEN or CLOSED, need granularity to be explicit about what is open, when and for what purpose, then gradually develop the culture of loosening these restrictions.

- Researchers are willing to share data, but data is expensive to produce (intellectual capital: experimental design, acquisition cost, time); large datasets have many possible uses (multiple research questions); currently, the reward currencies are intellectual advances, publications and citations; there is no clear reward or motivation for providing data completely free of any constraint.

- Willing to share data, need to provide incentives for contributions; establish common data use agreements; adopt common metadata, vocabularies, data formats/services; streamlining deployment of infrastructure to data sources (heterogeneous data access methods/ authentication/authorization); deploying data-type specific services attached to repositories

Objectives

Use Story I – Remote interactive multiresolution visualization of large volumetric datasets

Large amounts of image stacks or volumetric data are produced daily at brain research sites around the world. This includes human brain imaging data in clinics, connectome data in research studies, whole brain imaging with light-sheet microscopy and tissue clearing methods or micro-optical sectioning techniques, two-photon imaging, array tomography, and electron beam microscopy.

A key challenge in making such data available is to make it accessible without moving large amounts of data. Typical dataset sizes can reach the terabyte range, while a researcher may want to view or access only a small subset of the entire dataset.

The ability to easily deploy an active repository that combines large data storage with a set of computational services for accessing and viewing large volume datasets would address a key challenge present in modern neuroscience and across other domains.
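
To make the "small subset" point concrete, the following minimal sketch estimates which tiles a viewer would need to fetch for one 2D viewport at a given level of a multiresolution pyramid. The 256-pixel tile size, the power-of-two downsampling scheme and the function names are illustrative assumptions; they do not describe the BBIC format or service.

```python
import math

def pyramid_levels(width, height, tile=256):
    """Number of power-of-two levels until the image fits in a single tile."""
    levels = 1
    while width > tile or height > tile:
        width = math.ceil(width / 2)
        height = math.ceil(height / 2)
        levels += 1
    return levels

def tiles_for_viewport(x0, y0, x1, y1, level, tile=256):
    """Tile (column, row) indices covering a viewport.

    The viewport is given in full-resolution pixels, with x1 and y1 exclusive.
    Level 0 is full resolution; each further level halves both axes.
    """
    scale = 2 ** level
    col0 = (x0 // scale) // tile
    row0 = (y0 // scale) // tile
    col1 = ((x1 - 1) // scale) // tile
    row1 = ((y1 - 1) // scale) // tile
    return [(c, r) for r in range(row0, row1 + 1) for c in range(col0, col1 + 1)]
```

Under these assumptions, a viewer showing a fixed-size window requests only a handful of tiles regardless of how large the underlying image is, which is why serving tiles next to the storage avoids moving the full dataset.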

Use Story II – Feature extraction and analysis of large volumetric datasets

Neurons are essential building blocks of the brain and key to its information processing ability. The three-dimensional shape of a neuron plays a major role in determining its connectivity, integration of synaptic input and cellular firing properties. Thus, characterization of the 3D morphology of neurons is fundamentally important in neuroscience and related applications. Digitization of the morphology of neurons and other tree-shaped biological structures (e.g. glial cells, brain vasculature) has been studied for the last 30 years. Recent big neuroscience initiatives worldwide, e.g. USA’s BRAIN initiative and Europe’s Human Brain Project, highlight the importance of understanding the types of cells in nervous systems. Current reconstruction techniques (both manual and automated) show tremendous variability in the quality and completeness of the resulting morphology. Yet, building a large library of high quality 3D cell morphologies is essential to comprehensively cataloging the types of cells in a nervous system. Furthermore, enabling comparisons of neuron morphologies across species will provide additional sources of insight into neural function.

Automated reconstruction of neuron morphology has been studied by many research groups. Methods including fitting tubes or other geometrical elements, ray casting, spanning tree, shortest paths, deformable curves, pruning, etc., have been proposed. Commercial software packages such as Neurolucida have also started to include some of the automated neuron reconstruction methods. The DIADEM Challenge (http://diademchallenge.org/), a worldwide neuron reconstruction contest, was organized in 2010 by several major institutions as a way to stimulate progress and attract new computational researchers to join the technology development community.

A new effort, called BigNeuron (http://www.alleninstitute.org/bigneuron), aims to bring the latest automated neuron morphology reconstruction algorithms to bear on large image stacks from around the world.

The second use story would entail deploying Vaa3D (www.vaa3d.org) as an additional service to the active repository described in Use Story I. Vaa3D is open source and provides a plugin architecture into which any type of neuron reconstruction algorithm can be adapted. The second use story would require additional computational resources (and could benefit from multithreaded and parallel compute resources) for the reconstruction process.

In this use case, a neuroscientist user would provide via a web service input parameters to a Vaa3D instance which would trace any recognized neuron structures using a selected algorithm. The output file would be returned via the webservice.
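
The shape of such a web service request could look roughly like the sketch below. The REST API for this use story is not yet defined, so every field name here (`dataset_id`, `algorithm`, `parameters`) and the algorithm name used in the test are hypothetical; nothing in this example reflects an actual Vaa3D or BBIC interface.

```python
import json
from dataclasses import dataclass, asdict, field

@dataclass
class TracingRequest:
    """Hypothetical request body for a neuron-tracing web service."""
    dataset_id: str   # identifier of the image volume in the active repository
    algorithm: str    # name of the reconstruction algorithm selected by the user
    parameters: dict = field(default_factory=dict)  # algorithm-specific settings

    def to_json(self) -> str:
        return json.dumps(asdict(self), sort_keys=True)

def parse_request(body: str) -> TracingRequest:
    """Decode a request body, rejecting bodies that lack required fields."""
    data = json.loads(body)
    missing = {"dataset_id", "algorithm"} - data.keys()
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    return TracingRequest(data["dataset_id"], data["algorithm"],
                          data.get("parameters", {}))
```

Keeping the request a plain JSON document means the same body can be submitted from a browser client or a script, and the service can validate it before any compute resources are allocated.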

Expectations

Use Story I would require:

- A multi-terabyte storage capacity. Each image will typically range from 1-10TB.

- A compute node with fast IO bandwidth to storage device (to be specified shortly)

- The ability to deploy a Python-based service (BBIC, see appendix I) and supporting libraries (HDF5, etc).

- High performance internet connectivity for web service

- A standardized authentication/authorization/identity mechanism (first version could provide public access, current version uses HBP AAI).

- Web client code for interactively viewing dataset via BBIC service (provided by HBP)

- Modern web client (Chrome/Safari/Firefox) for interactive 2D/3D viewing using WebGL and/or OpenLayers.

- Sample neuroscience-based volumetric datasets including electron microscopy, light microscopy, two-photon imaging, light sheet microscopy, etc., ranging from subcellular to whole brain, provided by HBP, OpenConnectome, Allen Institute and others.

Use Story II would require:

- The active repository developed for Use Story I.

- The additional deployment of Vaa3D adapted for use with BBIC. A beta version of this is currently available. The REST API may need development.

- A multiprocessor compute node with high speed access to the storage device.

- Additional datasets including image stacks/volumes of clearly labeled single or multiple neurons – provided by HBP, Allen Institute and others.

Impacts and Benefits

Use Story I would enable a neuroscientist user to deploy their data in a specified repository where it would be accessible for web-based viewing and annotation.

Use Story II would enable the building of a large library of high quality 3D cell morphologies, which is essential to comprehensively cataloging the types of cells in a nervous system. Furthermore, enabling comparisons of neuron morphologies across species will provide additional sources of insight into neural function.

Information Viewpoint

Data

- Represents in vivo, in vitro and in silico entities

- Represents observations

- Describes properties using ontologies

- Records where an entity or observation is located

- Tracks how data is produced

- Tracks who performed experiments/manipulations

Data size

Each image will typically range from 1-10TB

Data collection size

O(10 PB) currently; expected to grow to O(1000 PB) within the next 5-10 years
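
A back-of-the-envelope calculation shows why these sizes argue for keeping computation next to the data. The sketch below assumes decimal units and a sustained rate of 1 GB/s (the per-node throughput quoted under the e-Infrastructure requirements); the function names are illustrative.

```python
TB = 10**12  # decimal terabyte, in bytes
GB = 10**9   # decimal gigabyte, in bytes
PB = 10**15  # decimal petabyte, in bytes

def transfer_hours(size_bytes, bytes_per_second):
    """Hours needed to move size_bytes at a sustained transfer rate."""
    return size_bytes / bytes_per_second / 3600

def images_in_collection(collection_bytes, image_bytes):
    """How many images of a given size fit in a collection of a given size."""
    return collection_bytes // image_bytes
```

Under these assumptions a single 10 TB image takes roughly 2.8 hours to move at 1 GB/s, and a 10 PB collection corresponds to about 1000 images of that size.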

Data format

Brain scans are stored as series of bitmaps, VTK (for 3D rendering), HDF5, or TIFF/JPEG at origin (converted to HDF5). From the data structure point of view, a single scan is either a file or a directory of files.

Data Identifier

The current system has an index to data and to metadata, and search facilities are provided to allow data discovery. Each dataset is associated with a globally unique identifier (GUID), and there are references (URIs) for multiple representations. For example, there is an entry for a unique dataset and its replica URIs, which link to a common GUID in the metadata system.
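
The relationship between a GUID and its replica URIs can be sketched as a small in-memory index. This is a toy illustration only: the class name, method names and the use of UUID4 values as GUIDs are assumptions, not the design of the HBP metadata system.

```python
import uuid

class MetadataIndex:
    """Toy index: one GUID per dataset, any number of replica URIs per GUID."""

    def __init__(self):
        self._replicas = {}   # GUID -> list of replica URIs
        self._guid_of = {}    # replica URI -> GUID

    def register(self, first_uri):
        """Create a new dataset entry and return its GUID."""
        guid = str(uuid.uuid4())
        self._replicas[guid] = []
        self.add_replica(guid, first_uri)
        return guid

    def add_replica(self, guid, uri):
        """Attach another representation or copy to an existing dataset."""
        if uri in self._guid_of:
            raise ValueError(f"URI already registered: {uri}")
        self._replicas[guid].append(uri)
        self._guid_of[uri] = guid

    def resolve(self, uri):
        """Return the GUID that a replica URI belongs to."""
        return self._guid_of[uri]

    def replicas(self, guid):
        """Return all known URIs for a dataset."""
        return list(self._replicas[guid])
```

With such a mapping, a client can start from any replica URI, resolve the common GUID, and then pick whichever replica is closest to its compute resources.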

Standards in use

W3C PROV-O
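
As an illustration of how the items listed under Data (entities, who performed an experiment, how data was produced, where it is located) map onto PROV-O, the sketch below builds a small JSON-LD document. The `prov:` terms are standard PROV-O classes and properties; the `ex:` namespace, the identifiers and the function name are hypothetical placeholders, not part of the HBP data model.

```python
import json

PROV = "http://www.w3.org/ns/prov#"

def provenance_record(dataset_id, activity_id, agent_id, location_id):
    """Build a minimal JSON-LD provenance graph for one dataset."""
    return {
        "@context": {"prov": PROV, "ex": "http://example.org/"},
        "@graph": [
            # Who performed the experiment or manipulation
            {"@id": f"ex:{agent_id}", "@type": "prov:Agent"},
            # How the data was produced
            {"@id": f"ex:{activity_id}", "@type": "prov:Activity",
             "prov:wasAssociatedWith": {"@id": f"ex:{agent_id}"}},
            # The data object itself and where it is located
            {"@id": f"ex:{dataset_id}", "@type": "prov:Entity",
             "prov:wasGeneratedBy": {"@id": f"ex:{activity_id}"},
             "prov:wasAttributedTo": {"@id": f"ex:{agent_id}"},
             "prov:atLocation": {"@id": f"ex:{location_id}"}},
        ],
    }

def to_jsonld(record):
    """Serialise the record for storage or exchange."""
    return json.dumps(record, indent=2, sort_keys=True)
```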

Data Management Plan

- Tier 0 - Unrestricted

- All metadata and/or data freely available (includes contributor, specimen details, methods/ protocols, data type, access URL)

- Reward: Potential citation, collaboration

- Tier 1 - Restricted use

- Data available for restricted use, developing analysis algorithms

- Reward: Data citation

- Tier 2 - Restricted use

- Data available for restricted use, nonconflicting research questions

- Reward: Co-authorship

- Tier 3 - Restricted use

- Full data available for collaborative investigation, joint research questions

- Reward: Collaboration, co-authorship

Privacy policy

Data use agreement

- Sets conditions for data use

- Don’t abuse privileges (e.g. deidentify human data)

- Don’t redistribute, go to approved repository for registered access (maintain data integrity, tracking accesses)

- Agree to acceptable use policies (e.g. investigate non-embargoed questions only)

- Embargo duration

- Share and share-alike - if data combined with others that should be shared, result should be shared

- Commercial use?

- Stakeholders

- Points to research registry for dataset

- Owners can reserve (embargo) data access for specific research questions for limited time period

- Others may access for non-embargoed use
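
The embargo rule in the last two items can be sketched as a simple check. The class, field names and the idea of matching research questions by exact string are simplifying assumptions made for illustration; a real research registry would need a richer way to compare questions.

```python
from dataclasses import dataclass
from datetime import date

@dataclass(frozen=True)
class Embargo:
    """A research question reserved by the data owner until a given date."""
    question: str
    until: date

def may_access(question, today, embargoes):
    """Non-owners may use the data unless the question is under an active embargo."""
    return not any(e.question == question and today <= e.until for e in embargoes)
```

For example, with a single embargo on one question until 1 January 2016, a request about that question is refused in 2015 and allowed once the embargo has expired, while requests about other questions are allowed throughout.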

Metadata

The metadata are important for finding the right scan in the global metadata repository. Current metadata of scans are: resolution, species, size of the file, number, etc.

Metadata format

Some metadata are included in the data file itself, but most are stored in separate JSON and XML files.

Standards in use

JSON & XML
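
A minimal sketch of a JSON metadata record and a lookup over such records follows. The fields come from the list above (resolution, species, file size); the key names, the micrometre unit for resolution and the helper functions are assumptions made for illustration.

```python
import json

def scan_metadata(species, resolution_um, size_bytes):
    """Build one metadata record; resolution is assumed to be in micrometres per voxel."""
    return {
        "species": species,
        "resolution_um": list(resolution_um),
        "size_bytes": size_bytes,
    }

def find_scans(records, **criteria):
    """Return the records whose fields equal all of the given criteria."""
    return [r for r in records if all(r.get(k) == v for k, v in criteria.items())]

def to_sidecar(record):
    """Serialise a record as the text of a JSON sidecar file."""
    return json.dumps(record, indent=2, sort_keys=True)
```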

Data LifeCycle

- Ingestion:

- Register unique identifiers for each contributor, specimen type, methods/protocols, data types, location, etc

- Mapping metadata for data objects to common HBP data model with provenance info

- Issuing persistent identifiers for data objects in each repository

- Data registration REST-API

- Metadata harvesting

- Defining OAI-PMH with common HBP Core data model

- Add entry to KnowledgeGraph – semantic provenance graph

- Curation

- Registering spatial data to common spatial coordinates

- Data feature extraction/quality checks

- Update KnowledgeSpace Ontologies

- Augmenting ontologies for metadata (methods/ protocols/specimens, etc)

- Review article defining concepts w/data links

- Search

- Indexing to enable discovery of related (integrable) data

- Access
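
For the metadata harvesting step, an OAI-PMH harvester issues plain HTTP requests with a `verb` parameter. The sketch below builds a `ListRecords` request URL; the base URL in the usage note is a placeholder, and `oai_dc` is used only because it is the metadata format every OAI-PMH repository must support. An HBP-specific metadata prefix for the HBP Core data model would be an additional definition that is not specified here.

```python
from urllib.parse import urlencode

def list_records_url(base_url, metadata_prefix="oai_dc", from_date=None, set_spec=None):
    """Build an OAI-PMH ListRecords request URL.

    from_date (YYYY-MM-DD) limits harvesting to records changed since that day;
    set_spec restricts harvesting to one set, if the repository defines sets.
    """
    params = {"verb": "ListRecords", "metadataPrefix": metadata_prefix}
    if from_date:
        params["from"] = from_date
    if set_spec:
        params["set"] = set_spec
    return f"{base_url}?{urlencode(params)}"
```

Calling `list_records_url("https://repo.example.org/oai", from_date="2015-05-01")` yields a URL that an incremental harvester could poll to pick up only new or changed records.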

Technology Viewpoint

System Architecture

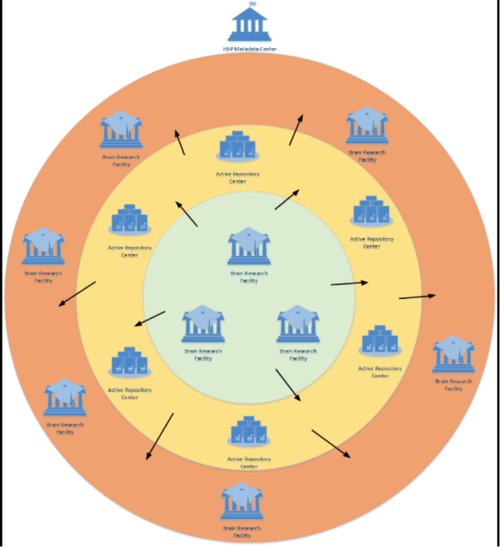

Brain Research Facility. Large amounts of image stacks or volumetric data are produced daily at brain research sites around the world. This includes human brain imaging data in clinics, connectome data in research studies, whole brain imaging with light-sheet microscopy and tissue clearing methods or micro-optical sectioning techniques, two-photon imaging, array tomography, and electron beam microscopy.

Active Repository Center. The data produced by a Brain Research Facility must be stored in data centers that provide suitable storage solutions as well as capacity for data processing.

Metadata Brain Center. Maintains the central HBP metadata repository. The repository is a kind of directory for all the data available in Active Repositories. It also maintains the LDAP directory of HBP members and an OpenID interface for authentication.

Community data access protocols

The images are transferred manually to Active Repository Centers, where they are processed later. In many cases the FTP protocol is used: brain researchers upload the scans to an FTP server.

Data management technology

BBIC (Blue Brain Image Container) (refer to HBP Volume Imaging Service.docx), which manages 2 data types:

- Stacks of images

- 3D volumes

Data access control

The interface processes data using the POSIX API and produces a comparatively small amount of output data in the form of HTML+WebGL.

Public data access protocol

HTTP queries

Public authentication mechanism

Data owner: neuroscientists who have administrative access to the data.

Current status: The Human Brain Project maintains its own LDAP repository of users and groups. An OpenID provider built on top of it supports identification of the users. There must be an easy way to maintain ACL rights based on group membership. Each scan or data object could have individual ACL rights maintained by the users holding the respective permissions.

- At the moment, data is accessible only to authenticated users, including users processing data off-line.

- Data owners decide the access controls.

Requirements:

- System should be compatible with OpenID

- ACL
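
A group-based ACL check of the kind described above could be sketched as follows. The data structure, the permission names and the rule that the owner always has access are illustrative assumptions; the group list would in practice come from the HBP LDAP directory after the user has been identified via OpenID.

```python
from dataclasses import dataclass, field

@dataclass
class DataObject:
    """A scan or other data object with its own access control list."""
    owner: str
    acl: dict = field(default_factory=dict)  # group name -> set of permissions

def is_allowed(user, groups, obj, permission):
    """Grant access to the owner, or to any user in a group holding the permission."""
    if user == obj.owner:
        return True
    return any(permission in obj.acl.get(group, set()) for group in groups)

def grant(obj, acting_user, group, permission):
    """Let the data owner extend the ACL; other users are refused."""
    if acting_user != obj.owner:
        raise PermissionError("only the data owner may change access controls")
    obj.acl.setdefault(group, set()).add(permission)
```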

Non-functional requirements

| Performance Requirements | Requirement levels | Description |
|---|---|---|
| Availability | Normal | Process of migrating data between active repositories if needed; this might be limited (→ next-step requirement). |
| Accessibility | Normal | Simplicity of the access control (UC5); not that important at the very beginning. |
| Utility | Middle | How difficult distribution/upgrading of the application software onto the environment will be. The Docker approach is under HBP investigation now. |
| Reliability | Normal | How difficult distribution/upgrading of the application software onto the environment will be. The Docker approach is under HBP investigation now. |
| Scalability | Middle | Scalability of processing load distribution/brokering; instantiation of new processing units based on the traffic to active repositories should be easy (maybe automatic). (UC2) |
| Efficiency | High | Simplicity of the process of transferring data to the active repository site |
| Effectiveness | High | Simplicity of bringing up another active repository |
| Flexibility | High | Flexibility of accessing the same data by multiple scientists, including intra-group access |
| Decentralisation | High | Decentralization of resource management. There are many collaborating groups, but they remain independent. The system should be flexible enough to let those groups obtain resources independently while still providing some level of integration. |

Software and applications in use

Software/ applications/services

- BBIC (Blue Brain Image Container, a collection of REST services for providing imaging and meta-information extracted from BBIC files)

- Vaa3D (www.vaa3d.org), which provides a plugin architecture into which any type of neuron reconstruction algorithm can be adapted.

Runtime libraries/APIs

- BBIC is implemented in Python using the Tornado web server; an alternative version uses Python’s minimalist SimpleHTTPServer

- REST API

- HCL (Hyperdimensional Compression Library)
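
To give a feel for what a minimal Python REST service of this kind involves, the sketch below uses only the standard library's `http.server` module (the Python 3 successor to SimpleHTTPServer). It is not BBIC code and does not use Tornado; the `/datasets/<name>` URL layout and the in-memory catalogue are hypothetical.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical in-memory catalogue; a real service would read this from image files.
DATASETS = {"demo": {"type": "stack", "slices": 3}}

def respond(path):
    """Map a request path to (status code, JSON-serialisable body)."""
    parts = [p for p in path.split("/") if p]
    if len(parts) == 2 and parts[0] == "datasets" and parts[1] in DATASETS:
        return 200, DATASETS[parts[1]]
    return 404, {"error": "not found"}

class Handler(BaseHTTPRequestHandler):
    def do_GET(self):
        # Delegate routing to respond() so it can be tested without a socket.
        status, body = respond(self.path)
        payload = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)

def serve(port=8000):
    """Run the service on localhost until interrupted."""
    HTTPServer(("127.0.0.1", port), Handler).serve_forever()
```

Keeping the routing logic in a plain function separate from the handler makes it straightforward to move the same logic to another web framework later.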

Requirements for e-Infrastructure

- PERFORMANCE. Sustain a throughput of 1 GB/s per node to support 10 users (for image service nodes: throughput between the data server and the storage)

- STORAGE CAPABILITIES

- HDF5 files of up to 10 TB in size

- POSIX access to data

- CO-LOCATION. CPU close to data
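
The performance figure above translates into a simple sizing rule. The sketch below assumes that load scales linearly, that bandwidth is shared evenly among users, and that units are decimal; the function names are illustrative.

```python
import math

GB = 10**9  # decimal gigabyte, in bytes

def per_user_bandwidth(node_bytes_per_second=1 * GB, users_per_node=10):
    """Bytes per second available to each user when a node is fully loaded."""
    return node_bytes_per_second / users_per_node

def nodes_needed(concurrent_users, users_per_node=10):
    """Number of image service nodes required for a given number of users."""
    return math.ceil(concurrent_users / users_per_node)
```

Under these assumptions each user gets about 100 MB/s from a fully loaded node, and 25 concurrent users would call for 3 image service nodes.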

EGI Contacts

- Project Leader: Tiziana Ferrari, EGI.eu, tiziana.ferrari@egi.eu

- Technology Leader: Lukasz Dutka, Cyfronet, lukasz.dutka@cyfronet.pl

- Requirement Collector: Bartosz Kryza, Cyfronet, bkryza@agh.edu.pl

- Requirement Collector: Yin Chen, EGI.eu, yin.chen@egi.eu

Meeting and minutes

- Second technical discussion meeting for supporting the HBP use cases: VT Federated Data Meeting 16th Jun 2015 meeting minutes

- HBP discussion in EGI CF 2015 Lisbon, Towards an Open Data Cloud session: [1]

- Second Requirement collection meeting with HBP VT Federated Data Meeting 13th May 2015 meeting minutes

- First technical discussion meeting for supporting the HBP use cases VT Federated Data Meeting 7th May 2015 meeting minutes

- First Requirement collection meeting with HBP 21 Apr 2015 meeting page

Reference

- EGI Document Database Entry for HBP: https://documents.egi.eu/secure/AddFiles

- Active Repositories - HBP use cases .doc

- Lukasz's presentation on EGI 2015 HBP session .pptx

- Recording of EGI-HBP interaction meeting on 21 April 2015 .arf

- Requirement Extraction for Open Data Platform Project, information is filled in template .doc

- Requirements extraction from the meeting recording .doc

- Sean's keynote talk on EGI 2015 .pdf

- Sean's presentation on EGI 2015 EGI-HBP discussion session .pdf

- Sean's presentation on EGI 2015 Open Data Cloud session .pdf

- Technique Analysis of the HBP requirements (Lukasz) google doc

- RT tickets for HBP https://rt.egi.eu/rt/Ticket/Display.html?id=8572