Applications on Demand Service - architecture


This page is deprecated; its content has moved to https://docs.egi.eu/users/applications-on-demand/


Latest revision as of 10:19, 23 July 2021


Applications on Demand (AoD) Service Information pages

The EGI Applications on Demand (AoD) service is EGI’s response to researchers who want to use applications on demand, together with the compute and storage resources needed to run them and store their data.

The service can be accessed through the EGI Marketplace: https://marketplace.egi.eu

Service architecture

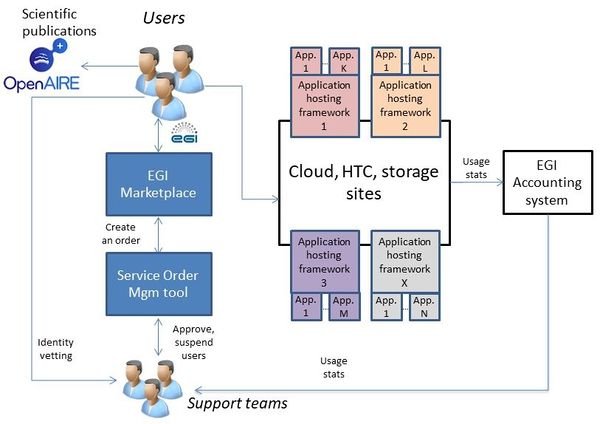

The figure above shows the architecture of the EGI Applications on Demand service.

The architecture is composed of the following components:

- A catch-all Virtual Organisation (VO) called vo.access.egi.eu and a pre-allocated pool of High-Throughput Computing (HTC) and cloud resources. These resources are configured to support the EGI Applications on Demand research activities. The pool currently includes cloud resources from Italy (INFN-Catania), Spain (CESGA) and France (IN2P3-IRES).

- The X.509 credentials factory service (also called the eToken server)[1] is a standards-based solution for the central management of robot certificates. It also provides Per-User Sub-Proxy (PUSP) certificates. With PUSP, the individual user operating through a shared robot certificate can be identified. Only the Science Gateways/portals integrated in the platform may contact the server and request robot proxy certificates, and only on behalf of authorized users.

- A set of Science Gateways/portals hosting the applications that users can run through the EGI Applications on Demand service. Three frameworks are currently used: the FutureGateway Science Gateway (FGSG), the WS-PGRADE/gUSE portal and the Elastic Cloud Computing Cluster (EC3).

- The EGI VMOps dashboard provides a Graphical User Interface (GUI) for Virtual Machine (VM) management operations on the EGI Federated Cloud. In this user-friendly environment, users can create and manage VMs, along with their associated storage devices, on any of the EGI Federated Cloud providers. The dashboard gives a complete view of the deployed applications. It also offers a unified resource management experience, independent of the technology driving each resource centre of the federation. Users create new infrastructure topologies (a set of VMs with their associated storage and contextualization) with a wizard-like builder. The builder guides them through the selection of the virtual appliances, virtual organisation and resource provider, and through the final customisation of the VMs to be deployed. Its tight integration with the AppDB Cloud Marketplace allows automatic discovery of the appliances supported at each resource provider. Once a topology has been created, VMOps can apply management actions to the whole set of VMs in the topology and, at a finer grain, to each individual VM.

- The EGI Notebooks service, built on top of JupyterHub, offers an open-source web application where users can create and share documents that contain live code, equations, visualizations and explanatory text.
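The PUSP idea, where many users share one robot certificate yet each gets a distinguishable proxy subject, can be illustrated with a small Python sketch. It assumes, purely for illustration, that a sub-proxy subject is the robot DN plus one extra CN derived from a hash of the user's identifier. The `user:` prefix, the hashing scheme, the example DN and the helper names `pusp_cn`, `pusp_subject` and `owner_of` are all hypothetical. None of them are taken from the eToken server or the PUSP specification.

```python
import hashlib
from typing import List, Optional


def pusp_cn(user_id: str) -> str:
    """Derive a stable, opaque CN value for one user (hypothetical scheme)."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    # Hypothetical "user:" prefix; the real PUSP naming convention may differ.
    return "user:" + digest[:16]


def pusp_subject(robot_dn: str, user_id: str) -> str:
    """Append a per-user CN to the shared robot DN (slash-separated form)."""
    return robot_dn.rstrip("/") + "/CN=" + pusp_cn(user_id)


def owner_of(subject: str, robot_dn: str, known_users: List[str]) -> Optional[str]:
    """Map a sub-proxy subject back to the user it was issued for, if known."""
    for user in known_users:
        if pusp_subject(robot_dn, user) == subject:
            return user
    return None


if __name__ == "__main__":
    # Hypothetical robot DN and user identifiers, for illustration only.
    robot = "/DC=org/DC=example/O=Robots/CN=Robot: AoD gateway"
    users = ["alice@example.org", "bob@example.org"]
    for u in users:
        print(u, "->", pusp_subject(robot, u))
    print(owner_of(pusp_subject(robot, users[1]), robot, users))
```

All proxies share the robot's DN prefix, so the robot credential remains the trust anchor. The per-user suffix is what lets operators trace activity back to an individual.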

[1] Valeria Ardizzone, Roberto Barbera, Antonio Calanducci, Marco Fargetta, E. Ingrà, Ivan Porro, Giuseppe La Rocca, Salvatore Monforte, R. Ricceri, Riccardo Rotondo, Diego Scardaci, Andrea Schenone: The DECIDE Science Gateway. Journal of Grid Computing 10(4): 689-707 (2012)
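A VMOps topology can be modelled with plain Python data classes to make the concept more concrete. In this model, a topology groups VMs under one VO, and each VM has an appliance, a resource provider, optional storage and optional contextualization. Actions can target either the whole topology or a single VM. This is a minimal sketch: every class, field and method name is hypothetical, and it is not the VMOps or AppDB data model or API.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

# Hypothetical model of a VMOps-style topology; not the VMOps/AppDB data model.
_ACTION_STATES = {"start": "running", "stop": "stopped"}


@dataclass
class VirtualMachine:
    name: str
    appliance: str                            # virtual appliance identifier
    provider: str                             # resource provider hosting the VM
    storage_gb: int = 0                       # attached block storage, 0 = none
    contextualization: Optional[str] = None   # e.g. a cloud-init user-data script
    state: str = "stopped"


@dataclass
class Topology:
    name: str
    vo: str                                   # virtual organisation used for all VMs
    vms: Dict[str, VirtualMachine] = field(default_factory=dict)

    def add_vm(self, vm: VirtualMachine) -> None:
        """Add a VM to the topology; VM names must be unique within it."""
        if vm.name in self.vms:
            raise ValueError(f"duplicate VM name: {vm.name}")
        self.vms[vm.name] = vm

    def apply(self, action: str, vm_name: Optional[str] = None) -> List[str]:
        """Apply an action to one VM, or to every VM when vm_name is None.

        Returns the names of the VMs the action was applied to.
        """
        if action not in _ACTION_STATES:
            raise ValueError(f"unsupported action: {action}")
        if vm_name is None:
            targets = list(self.vms.values())    # topology-wide action
        else:
            targets = [self.vms[vm_name]]        # fine-grained action on one VM
        for vm in targets:
            vm.state = _ACTION_STATES[action]
        return [vm.name for vm in targets]


if __name__ == "__main__":
    topo = Topology("analysis", vo="vo.access.egi.eu")
    topo.add_vm(VirtualMachine("head", "example-appliance", "INFN-Catania", storage_gb=50))
    topo.add_vm(VirtualMachine("worker-1", "example-appliance", "INFN-Catania"))
    print(topo.apply("start"))              # start the whole topology
    print(topo.apply("stop", "worker-1"))   # stop a single VM
```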

Available resources

Currently available resources, grouped by category:

- Cloud Resources:
  - 246 vCPU cores
  - 781 GB of RAM
  - 2.1 TB of object storage

- High-Throughput Resources:
  - 9.5 million HEPSPEC
  - 1.4 TB of disk storage

For more details about the resources allocated for supporting this service, please click here.
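The cloud figures listed above support a rough sizing calculation. The following Python sketch derives the average RAM per vCPU and estimates how many identical VMs of a given size the aggregate pool could hold. The 4 vCPU / 8 GB flavour is a hypothetical example. The estimate compares aggregate totals only and ignores fragmentation across providers and hosts, per-user quotas and any CPU/RAM overcommit.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Capacity:
    vcpus: int
    ram_gb: float


def ram_per_vcpu(pool: Capacity) -> float:
    """Average RAM available per vCPU core in the aggregate pool."""
    return pool.ram_gb / pool.vcpus


def max_instances(pool: Capacity, flavour: Capacity) -> int:
    """Rough count of identical VMs of one size that fit the aggregate totals.

    Only totals are compared: fragmentation across providers/hosts, per-user
    quotas and any CPU/RAM overcommit are ignored.
    """
    by_cpu = pool.vcpus // flavour.vcpus
    by_ram = int(pool.ram_gb // flavour.ram_gb)
    return min(by_cpu, by_ram)


cloud = Capacity(vcpus=246, ram_gb=781)    # cloud figures listed above
print(f"{ram_per_vcpu(cloud):.2f} GB of RAM per vCPU")       # 3.17 GB of RAM per vCPU
print(max_instances(cloud, Capacity(vcpus=4, ram_gb=8)))     # 61
```

With these figures the pool averages about 3.17 GB of RAM per vCPU. For the hypothetical 4 vCPU / 8 GB flavour, vCPUs are the limiting factor: 61 instances by vCPU count versus 97 by RAM.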

Operational Level Agreements

- EC3: https://documents.egi.eu/document/3370

- FGSG: https://documents.egi.eu/document/2782

- WS-PGRADE/gUSE: https://documents.egi.eu/document/3368

- Cloud resource providers: https://documents.egi.eu/document/2773

GGUS Support Units (SUs)

- FutureGateway Science Gateway (FGSG):
  - The FGSG GGUS SU is available under the Core Services category

- Elastic Cloud Computing Cluster (EC3):
  - The EC3 GGUS SU is available under the Core Services category

- WS-PGRADE/gUSE portal:
  - The WS-PGRADE/gUSE GGUS SU is available under the Core Services category

- EGI Notebooks:
  - The EGI Notebooks GGUS SU is available under the EGI Notebooks category

- EGI VMOps dashboard:
  - The AppDB GGUS SU is available under the Core Services category

Links for administrators

User approval:

- VO membership management interface in PERUN: https://perun.metacentrum.cz/cert/gui/

- To register in the VO (relevant for Science Gateway robot certificates and for support staff): https://perun.metacentrum.cz/cert/registrar/?vo=vo.access.egi.eu

Monitoring

- The EGI Applications on Demand service components are registered in GOCDB (http://gocdb.egi.eu/). They are connected to the EGI monitoring system, which is based on ARGO (http://argo.egi.eu).

- By default, ARGO automatically gathers the service endpoints from GOCDB and runs simple HTTPS checks using standard Nagios probes. New probes can be developed and added if necessary.

- The following service components are monitored by ARGO:
  - EGI-FGSG
  - EGI-NOTEBOOKS
  - GRIDOPS-WS-PGRADE
  - GRIDOPS-APPDB
  - GRIDOPS-EC3

- To monitor the service components, check the ARGO report page: http://argo.egi.eu/egi/OPS-MONITOR-Critical
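The simple HTTPS checks mentioned above follow the Nagios plugin convention. A probe prints a one-line status and exits with 0 (OK), 1 (WARNING), 2 (CRITICAL) or 3 (UNKNOWN). The following standard-library Python sketch shows what such a check could look like. It is not one of the actual ARGO probes, and the 5-second warning threshold and the function names `classify` and `check_https` are assumptions.

```python
import time
import urllib.error
import urllib.request
from typing import Optional, Tuple

# Nagios plugin exit codes
OK, WARNING, CRITICAL, UNKNOWN = 0, 1, 2, 3
LABELS = {OK: "OK", WARNING: "WARNING", CRITICAL: "CRITICAL", UNKNOWN: "UNKNOWN"}


def classify(status: Optional[int], elapsed: float, warn_after: float = 5.0) -> int:
    """Map an HTTP status code (None = no response) and latency to a Nagios state."""
    if status is None or status >= 400:
        return CRITICAL
    if elapsed > warn_after:
        return WARNING
    return OK


def check_https(url: str, timeout: float = 10.0, warn_after: float = 5.0) -> Tuple[int, str]:
    """Fetch url once and return (nagios_state, one-line status message)."""
    status: Optional[int]
    start = time.monotonic()
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            status = resp.status
    except urllib.error.HTTPError as err:
        status = err.code            # the server answered with an HTTP error code
    except ValueError as err:
        return UNKNOWN, f"HTTPS UNKNOWN - invalid URL: {err}"
    except OSError:
        status = None                # DNS, TLS, timeout or connection failure
    elapsed = time.monotonic() - start
    state = classify(status, elapsed, warn_after)
    return state, f"HTTPS {LABELS[state]} - status={status} time={elapsed:.2f}s"
```

A call such as `check_https("https://example.org/")` returns a `(state, message)` tuple. A wrapper script would print the message and exit with the state so that a Nagios-compatible scheduler can interpret the result.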

Accounting

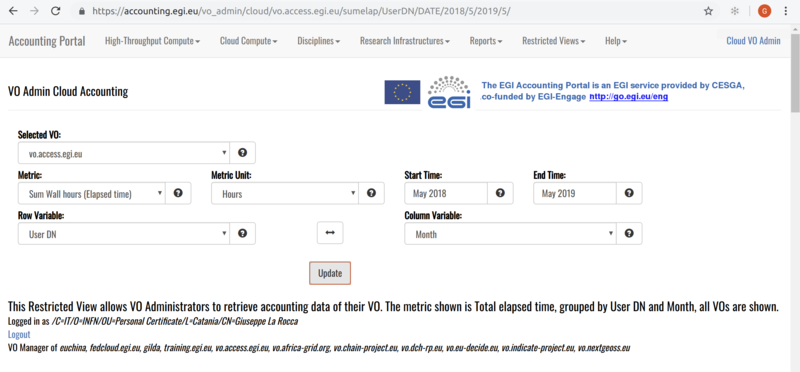

- Accounting data about the VO users can be checked here: https://accounting.egi.eu/

- From the EGI Accounting Portal it is possible to check the accounting metrics generated for both grid- and cloud-based resources supporting the vo.access.egi.eu VO.

- From the top menu, click on 'Restrict View' and 'VO Admin' to check the accounting data of the platform users.
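Accounting records for the VO may be exported for offline analysis. In that case, a per-user summary split into grid- and cloud-based usage can be approximated with a few lines of Python. The record layout (user, resource type, CPU hours), the `summarise` helper and the sample values are hypothetical. They are not the EGI Accounting Portal's export format or data.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Hypothetical record layout: (user, resource_type, cpu_hours)
Record = Tuple[str, str, float]
RESOURCE_TYPES = ("grid", "cloud")


def summarise(records: Iterable[Record]) -> Dict[str, Dict[str, float]]:
    """Sum CPU hours per user, split into grid- and cloud-based usage."""
    totals: Dict[str, Dict[str, float]] = defaultdict(
        lambda: {kind: 0.0 for kind in RESOURCE_TYPES}
    )
    for user, kind, hours in records:
        if kind not in RESOURCE_TYPES:
            raise ValueError(f"unexpected resource type: {kind}")
        totals[user][kind] += hours
    return dict(totals)


if __name__ == "__main__":
    # Illustrative sample records, not real accounting data.
    sample = [("user-a", "grid", 12.0), ("user-a", "cloud", 3.5), ("user-b", "cloud", 8.0)]
    for user, usage in sorted(summarise(sample).items()):
        print(user, usage)
```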

Policies & documents

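- Acceptable Use Policy (AUP) and Conditions of Use of the 'EGI Applications on Demand (AoD) service': https://documents.egi.eu/public/ShowDocument?docid=2635

- EGI Applications on Demand (AoD) service Security Policy: https://documents.egi.eu/public/ShowDocument?docid=2734

- Doc vetting manual for the EGI Support team: https://documents.egi.eu/public/ShowDocument?docid=3127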

Useful Links

- VO ID card: http://operations-portal.egi.eu/vo/view/voname/vo.access.egi.eu

- Name: vo.access.egi.eu

- Scope: Global

- Disciplines: Support Activities

- VOMS servers: voms1.grid.cesnet.cz, voms2.grid.cesnet.cz

- VO Membership Management: https://perun.metacentrum.cz/perun-registrar-cert/?vo=vo.access.egi.eu

- Contacts:
  - EGI Support Team: applications-platform-support@mailman.egi.eu
  - Managers:
    - Giuseppe La Rocca (<giuseppe.larocca@egi.eu>)
    - Diego Scardaci (<diego.scardaci@egi.eu>)
    - Gergely Sipos (<gergely.sipos@egi.eu>)
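The VOMS servers listed above assert membership of vo.access.egi.eu as attributes attached to a user's proxy. These attributes are Fully Qualified Attribute Names (FQANs) of the form `/vo/group/Role=.../Capability=...`, which `voms-proxy-info --fqan` can list. The Python sketch below parses such strings and checks VO membership. The helpers `parse_fqan` and `is_member` are illustrative and not part of any VOMS client library. They perform no signature or validity checks, which a real service would leave to proper VOMS validation.

```python
from typing import Dict, Iterable, List, Tuple


def parse_fqan(fqan: str) -> Tuple[str, str, str]:
    """Split an FQAN such as '/vo/group/Role=r/Capability=c' into (vo, group, role)."""
    groups: List[str] = []
    attrs: Dict[str, str] = {}
    for part in fqan.strip().split("/"):
        if not part:
            continue                      # skip the empty piece before the leading '/'
        if "=" in part:
            key, value = part.split("=", 1)
            attrs[key] = value            # Role=... or Capability=...
        else:
            groups.append(part)
    vo = groups[0] if groups else ""
    return vo, "/" + "/".join(groups), attrs.get("Role", "NULL")


def is_member(fqans: Iterable[str], vo: str = "vo.access.egi.eu") -> bool:
    """True if any FQAN belongs to the given VO."""
    return any(parse_fqan(f)[0] == vo for f in fqans)


if __name__ == "__main__":
    print(parse_fqan("/vo.access.egi.eu/Role=NULL/Capability=NULL"))
    print(is_member(["/vo.access.egi.eu/Role=NULL/Capability=NULL"]))
```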

Roadmap

- ~~Integration of JupyterHub as a Service (mid 2018)~~
- ~~Upgrade of the CSG to Liferay 7 to use the FutureGateway (FG) API server developed in the context of the INDIGO-DataCloud project (2019)~~
- ~~Integration of the open-source serverless Computing Platform for Data-Processing Applications~~
- ~~Improvement of the user experience in the EC3 portal (mid 2018)~~
- ~~Integration of the HNSciCloud voucher schemes in the EC3 portal (2018)~~
- ~~Joining of the IN2P3-IRES cloud provider to the vo.access.egi.eu VO (2019)~~
- ~~Configuration of one instance of the PROMINENCE service for the vo.access.egi.eu VO (2019)~~
- ~~Creation of an Ansible recipe to run big data workflows with the ECAS/Ophidia framework in EGI (2019)~~
- ~~Agreement of OLAs with additional cloud providers of the EGI Federation (2019)~~